AWS Docker Walkthrough with ElasticBeanstalk: Part 2

Sun Jun 28, 2015 in amazon, aws, elasticbeanstalk, docker, ecs, cloudformation, ebextensions

While deploying Docker containers for immutable infrastructure on AWS ElasticBeanstalk, I’ve learned a number of useful tricks that go beyond the official Amazon documentation.

This series of posts is an attempt to summarize some of the useful bits that may benefit others facing the same challenges.

Previously: Part 1 : Preparing a VPC for your ElasticBeanstalk environments

Part 2 : Creating your ElasticBeanstalk environment

Step 1: Create your Application in AWS

Each AWS account needs to have your ElasticBeanstalk application defined initially.

Operationally, there are few reasons to remove an application from an AWS account, so there’s a good bet it’s already there.

aws elasticbeanstalk create-application \

--profile aws-dev \

--region us-east-1 \

--application-name myapp \

--description 'My Application'

You should really only ever have to do this once per AWS account.

There is an example of this in the Makefile as the make application target.
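Since the application is usually already there, the create step can be made safe to re-run. The following is a minimal sketch, assuming the aws-dev profile and us-east-1 region used above; the function name ensure_application is hypothetical and is not part of the Makefile.

```shell
# Hypothetical helper: create the ElasticBeanstalk application only if missing.
ensure_application() {
  local app="$1" profile="${2:-aws-dev}" region="${3:-us-east-1}"
  local count
  # Count the applications matching the name (prints 0 when none exist).
  count=$(aws elasticbeanstalk describe-applications \
    --profile "$profile" --region "$region" \
    --application-names "$app" \
    --query 'length(Applications)' --output text) || return 1
  if [ "$count" = "0" ]; then
    aws elasticbeanstalk create-application \
      --profile "$profile" --region "$region" \
      --application-name "$app" \
      --description 'My Application'
  else
    echo "application $app already exists"
  fi
}
```

Calling ensure_application myapp a second time should then only report that the application exists, rather than fail.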

Step 2 : Update your AWS development environment.

During our initial VPC creation, we used the aws command from the awscli python package.

When deploying ElasticBeanstalk applications, we use the eb command from the awsebcli python package.

On OS X, we run:

brew install awsebcli

On Windows, chocolatey doesn’t have awsebcli, but we can install python pip:

choco install pip

Again, because awsebcli is a python tool, we can install with:

pip install awsebcli

You may (or may not) need to prefix that pip install with sudo on Linux/Unix flavors, for example:

sudo pip install awsebcli

These tools will detect if they are out of date when you run them. You may eventually get a message like:

Alert: An update to this CLI is available.

When this happens, you will likely want to either upgrade via homebrew:

brew update && brew upgrade awsebcli

or, more likely, upgrade using pip directly:

pip install --upgrade awsebcli

Again, you may (or may not) need to prefix that pip install with sudo, for example:

sudo pip install --upgrade awsebcli

There really should be an awsebcli Docker image, but there presently is not. Add that to the list of images to build.

Step 3: Create a ssh key pair to use

Typically you will want to generate an ssh key locally and upload the public part:

ssh-keygen -t rsa -b 2048 -f ~/.ssh/myapp-dev -P ''

aws ec2 import-key-pair --key-name myapp-dev --public-key-material "$(cat ~/.ssh/myapp-dev.pub)"

Alternatively, if you are on a development platform without ssh-keygen for some reason, you can have AWS generate it for you:

aws ec2 create-key-pair --key-name myapp-dev --query 'KeyMaterial' --output text > ~/.ssh/myapp-dev

chmod 600 ~/.ssh/myapp-dev

The downside to the second method is that AWS has the private key (as they generated it, and you shipped it via https over the network to your local machine), whereas in the first example they do not.

This ssh key can be used to access AWS instances directly.

After creating this ssh key, it is probably a good idea to add it to your team’s password management tool (Keepass, Hashicorp Vault, Trousseau, Ansible Vault, Chef Encrypted Databags, LastPass, 1Password, Dashlane, etc) so that the private key isn’t only on your development workstation in your local user account.

Note the naming convention of ~/.ssh/$(PROJECT)-$(ENVIRONMENT) - this is the default key filename that eb ssh will use.
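To make the convention concrete, here is a small sketch that derives the key path from a project and environment name and checks that the private key is in place. The function names eb_key_path and check_eb_key are hypothetical helpers, not part of the eb cli.

```shell
# Hypothetical helper: print the key path that follows the
# ~/.ssh/$(PROJECT)-$(ENVIRONMENT) naming convention.
eb_key_path() {
  local project="$1" environment="$2"
  printf '%s/.ssh/%s-%s\n' "$HOME" "$project" "$environment"
}

# Report whether the private key for a project/environment exists.
check_eb_key() {
  local key
  key=$(eb_key_path "$1" "$2")
  if [ -f "$key" ]; then
    echo "found $key"
  else
    echo "missing $key" >&2
    return 1
  fi
}
```

For this walkthrough, check_eb_key myapp dev looks for ~/.ssh/myapp-dev.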

If you do not use the above naming convention, you will have to add the generated ssh private key to your ssh-agent’s keychain in order to use it:

[ -n "$SSH_AUTH_SOCK" ] || eval "$(ssh-agent)"

ssh-add ~/.ssh/myapp-dev

To list the ssh keys in your keychain, use:

ssh-add -l

So long as you see 4 or fewer keys, including the key you created above, you should be ok.

If you have more than 4 keys listed in your ssh-agent keychain, depending on the order they are tried by your ssh client, that may exceed the default number of ssh key retries allowed on the remote sshd server side, which will prevent you from connecting.
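The key count can be checked with a short script. This is a rough sketch that reads ssh-add -l style output on stdin; the function names are hypothetical, and the limit of 4 simply follows the rule of thumb above rather than any particular sshd setting.

```shell
# Hypothetical helper: count the identities in `ssh-add -l` style output read
# from stdin. Each loaded key is one line starting with its bit length.
count_agent_keys() {
  grep -c -E '^[0-9]+ ' || true
}

# Warn when the agent holds more keys than the limit (4 by default).
check_agent_keys() {
  local limit="${1:-4}" count
  count=$(count_agent_keys)
  if [ "$count" -gt "$limit" ]; then
    echo "warning: $count keys loaded, more than $limit"
    return 1
  fi
  echo "ok: $count keys loaded"
}
```

Typical usage would be ssh-add -l | check_agent_keys.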

Now we should have an ssh key pair defined in AWS that we can use when spinning up instances.

Step 4: Initialize your local development directory for the eb cli

Before using the eb command, you must eb init your project to create a .elasticbeanstalk/config.yml file:

eb init --profile aws-dev

The --profile aws-dev argument is only needed if you created profiles in your ~/.aws/config file. If you are using AWS environment variables for your ACCESS/SECRET keys, or have only one default AWS account, you may omit it.

The application must exist in AWS first, which is why this is run after the previous step of creating the Application in AWS.

You may be prompted for some critical bits:

$ eb init --profile aws-dev

Select a default region

1) us-east-1 : US East (N. Virginia)

2) us-west-1 : US West (N. California)

3) us-west-2 : US West (Oregon)

4) eu-west-1 : EU (Ireland)

5) eu-central-1 : EU (Frankfurt)

6) ap-southeast-1 : Asia Pacific (Singapore)

7) ap-southeast-2 : Asia Pacific (Sydney)

8) ap-northeast-1 : Asia Pacific (Tokyo)

9) sa-east-1 : South America (Sao Paulo)

(default is 3): 1

Select an application to use

1) myapp

2) [ Create new Application ]

(default is 2): 1

Select a platform.

1) PHP

2) Node.js

3) IIS

4) Tomcat

5) Python

6) Ruby

7) Docker

8) Multi-container Docker

9) GlassFish

10) Go

(default is 1): 7

Select a platform version.

1) Docker 1.6.2

2) Docker 1.6.0

3) Docker 1.5.0

(default is 1): 1

Do you want to set up SSH for your instances?

(y/n): y

Select a keypair.

1) myapp-dev

2) [ Create new KeyPair ]

(default is 2): 1

Alternatively, to avoid the questions, you can specify the full arguments:

eb init myapp --profile aws-dev --region us-east-1 -p 'Docker 1.6.2' -k myapp-dev

The end result is a .elasticbeanstalk/config.yml that will look something like this:

branch-defaults:

  master:

    environment: null

global:

  application_name: myapp

  default_ec2_keyname: myapp-dev

  default_platform: Docker 1.6.2

  default_region: us-east-1

  profile: aws-dev

  sc: git

Any field appearing as null will likely need some manual attention from you after the next step.
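A quick way to spot those fields is to search the file for null values. This is a minimal sketch; the function name null_fields is hypothetical, and it only handles simple "key: null" lines like the ones shown above.

```shell
# Hypothetical helper: list the keys in an eb config file whose value is null.
null_fields() {
  local config="${1:-.elasticbeanstalk/config.yml}"
  # Print "line-number:key" for each "key: null" entry.
  grep -n -E '^[[:space:]]*[A-Za-z_-]+:[[:space:]]*null[[:space:]]*$' "$config" \
    | sed -E 's/^([0-9]+):[[:space:]]*([A-Za-z_-]+):.*/\1:\2/'
}
```

Run from the project root, null_fields reads .elasticbeanstalk/config.yml by default.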

Step 5: Create the ElasticBeanstalk Environment

Previously, in Part 1 : Preparing a VPC for your ElasticBeanstalk environments, we generated a VPC using a CloudFormation template whose outputs include the Subnets and Security Group. We will need those things below.

Here is a repeat of that earlier snippet:

aws cloudformation describe-stacks --stack-name myapp-dev --profile aws-dev --region us-east-1 | jq -r '.Stacks[].Outputs'

[

{

"Description": "VPC Id",

"OutputKey": "VpcId",

"OutputValue": "vpc-b7d1d8d2"

},

{

"Description": "VPC",

"OutputKey": "VPCDefaultNetworkAcl",

"OutputValue": "acl-b3cfc7d6"

},

{

"Description": "VPC Default Security Group that we blissfully ignore thanks to self-referencing bugs",

"OutputKey": "VPCDefaultSecurityGroup",

"OutputValue": "sg-3e50a559"

},

{

"Description": "VPC Security Group created by this stack",

"OutputKey": "VPCSecurityGroup",

"OutputValue": "sg-0c50a56b"

},

{

"Description": "The subnet id for VPCSubnet0",

"OutputKey": "VPCSubnet0",

"OutputValue": "subnet-995236b2"

},

{

"Description": "The subnet id for VPCSubnet1",

"OutputKey": "VPCSubnet1",

"OutputValue": "subnet-6aa4fd1d"

},

{

"Description": "The subnet id for VPCSubnet2",

"OutputKey": "VPCSubnet2",

"OutputValue": "subnet-ad3644f4"

},

{

"Description": "The IAM instance profile for EC2 instances",

"OutputKey": "InstanceProfile",

"OutputValue": "myapp-dev-InstanceProfile-1KCQJP9M5TSVZ"

}

]

There are two ways to create a new ElasticBeanstalk environment:

- Using eb create with full arguments for the various details of the environment.

- Using eb create with a --cfg argument of a previous eb config save to a YAML file in .elasticbeanstalk/saved_configs.

The first way looks something like this:

eb create myapp-dev --verbose \

--profile aws-dev \

--tier WebServer \

--cname myapp-dev \

-p '64bit Amazon Linux 2015.03 v1.4.3 running Docker 1.6.2' \

-k myapp-dev \

-ip myapp-dev-InstanceProfile-1KCQJP9M5TSVZ \

--tags Project=myapp,Environment=dev \

--envvars DEBUG=info \

--vpc.ec2subnets=subnet-995236b2,subnet-6aa4fd1d,subnet-ad3644f4 \

--vpc.elbsubnets=subnet-995236b2,subnet-6aa4fd1d,subnet-ad3644f4 \

--vpc.publicip --vpc.elbpublic --vpc.securitygroups=sg-0c50a56b

The Makefile has an environment target that removes the need to fill in the fields manually:

outputs:

@which jq > /dev/null 2>&1 || ( which brew && brew install jq || which apt-get && apt-get install jq || which yum && yum install jq || which choco && choco install jq)

@aws cloudformation describe-stacks --stack-name myapp-dev --profile aws-dev --region us-east-1 | jq -r '.Stacks[].Outputs | map({key: .OutputKey, value: .OutputValue}) | from_entries'

environment:

eb create $(STACK) --verbose \

--profile aws-dev \

--tier WebServer \

--cname $(shell whoami)-$(STACK) \

-p '64bit Amazon Linux 2015.03 v1.4.3 running Docker 1.6.2' \

-k $(STACK) \

-ip $(shell make outputs | jq -r .InstanceProfile) \

--tags Project=$(PROJECT),Environment=$(ENVIRONMENT) \

--envvars DEBUG=info \

--vpc.ec2subnets=$(shell make outputs | jq -r '[ .VPCSubnet0, .VPCSubnet1, .VPCSubnet2 ] | @csv') \

--vpc.elbsubnets=$(shell make outputs | jq -r '[ .VPCSubnet0, .VPCSubnet1, .VPCSubnet2 ] | @csv') \

--vpc.publicip --vpc.elbpublic \

--vpc.securitygroups=$(shell make outputs | jq -r .VPCSecurityGroup)
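One detail worth knowing: jq's @csv filter wraps each string in double quotes, and the recipe only works because the shell strips them again. A plain comma join avoids relying on that. This is a small sketch with a hypothetical join_csv function, not something taken from the Makefile.

```shell
# Hypothetical helper: join its arguments with commas, for example to build
# the value passed to --vpc.ec2subnets or --vpc.elbsubnets.
join_csv() {
  local IFS=,
  printf '%s\n' "$*"
}
```

With the subnet ids from the outputs above, join_csv subnet-995236b2 subnet-6aa4fd1d subnet-ad3644f4 yields the same comma-separated list used in the manual eb create command.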

On the other hand, after a quick config save:

eb config save myapp-dev --profile aws-dev --region us-east-1 --cfg myapp-dev-sc

We now have the above settings in a YAML file .elasticbeanstalk/saved_configs/myapp-dev-sc.cfg.yml which can be committed to our git project.

This leads to the second way to create an ElasticBeanstalk environment:

eb create myapp-dev --cname myapp-dev --cfg myapp-dev-sc --profile aws-dev

The flip side is that the saved YAML config has static values embedded in it for a specific deployed VPC.

More docker goodness to come in Part 3…

AWS Docker Walkthrough with ElasticBeanstalk: Part 1

Sat Jun 27, 2015 in amazon, aws, elasticbeanstalk, docker, ecs, cloudformation, ebextensions

While deploying Docker containers for immutable infrastructure on AWS ElasticBeanstalk, I’ve learned a number of useful tricks that go beyond the official Amazon documentation.

This series of posts is an attempt to summarize some of the useful bits that may benefit others facing the same challenges.

Part 1 : Preparing a VPC for your ElasticBeanstalk environments

Step 1 : Prepare your AWS development environment.

On OS X, I install homebrew, and then:

brew install awscli

On Windows, I install chocolatey and then:

choco install awscli

Because awscli is a python tool, on either of these, or on the various Linux distribution flavors, we can also avoid native package management and alternatively use python easy_install or pip directly:

pip install awscli

You may (or may not) need to prefix that pip install with sudo, for example:

sudo pip install awscli

These tools will detect if they are out of date when you run them. You may eventually get a message like:

Alert: An update to this CLI is available.

When this happens, you will likely want to either upgrade via homebrew:

brew update && brew upgrade awscli

or, more likely, upgrade using pip directly:

pip install --upgrade awscli

Again, you may (or may not) need to prefix that pip install with sudo, for example:

sudo pip install --upgrade awscli

For the hardcore Docker fans out there, this is pretty trivial to run as a container as well. See CenturyLinkLabs/docker-aws-cli for a good example of that. Managing an aws config file requires volume mapping, or passing -e AWS_ACCESS_KEY_ID={redacted} -e AWS_SECRET_ACCESS_KEY={redacted}. There are various guides to doing this out there. This will not be one of them ;)

Step 2: Prepare your AWS environment variables

If you haven’t already, prepare for AWS cli access.

You can now configure your ~/.aws/config by running:

aws configure

This will create a default configuration.

I’ve yet to work with any company with only one AWS account though. You will likely find that you need to support managing multiple AWS configuration profiles.

Here’s an example ~/.aws/config file with multiple profiles:

[default]

output = json

region = us-east-1

[profile aws-dev]

aws_access_key_id={REDACTED}

aws_secret_access_key={REDACTED}

[profile aws-prod]

aws_access_key_id={REDACTED}

aws_secret_access_key={REDACTED}

You can create this by running:

$ aws configure --profile aws-dev

AWS Access Key ID [REDACTED]: YOURACCESSKEY

AWS Secret Access Key [REDACTED]: YOURSECRETKEY

Default region name [None]: us-east-1

Default output format [None]: json

Getting in the habit of specifying --profile aws-dev is a bit of a reassurance that you’re provisioning resources into the correct AWS account, and not sullying AWS cloud resources between VPC environments.
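For extra reassurance, a script can confirm which account a profile resolves to before provisioning anything. This is a sketch only: assert_account is a hypothetical function, the account id is whatever you expect for that profile, and it relies on the aws sts get-caller-identity call.

```shell
# Hypothetical guard: confirm a profile resolves to the expected AWS account id.
assert_account() {
  local profile="$1" expected="$2" actual
  actual=$(aws sts get-caller-identity --profile "$profile" \
    --query Account --output text) || return 1
  if [ "$actual" != "$expected" ]; then
    echo "profile $profile is account $actual, expected $expected" >&2
    return 1
  fi
  echo "profile $profile is account $actual"
}
```

A deployment script could start with assert_account aws-dev 123456789012 || exit 1, using your own account id in place of the placeholder.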

Step 3: Preparing a VPC

Deploying anything to AWS EC2 Classic instances these days is to continue down the path of legacy maintenance.

For new ElasticBeanstalk deployments, a VPC should be used.

The easiest/best way to deploy a VPC is to use a CloudFormation template.

Below is a VPC CloudFormation template that I use for deployment:

{

"AWSTemplateFormatVersion": "2010-09-09",

"Description": "MyApp VPC",

"Parameters" : {

"Project" : {

"Description" : "Project name to tag resources with",

"Type" : "String",

"MinLength": "1",

"MaxLength": "16",

"AllowedPattern" : "[a-z]*",

"ConstraintDescription" : "any alphabetic string (1-16) characters in length"

},

"Environment" : {

"Description" : "Environment name to tag resources with",

"Type" : "String",

"AllowedValues" : [ "dev", "qa", "prod" ],

"ConstraintDescription" : "must be one of dev, qa, or prod"

},

"SSHFrom": {

"Description" : "Lockdown SSH access (default: can be accessed from anywhere)",

"Type" : "String",

"MinLength": "9",

"MaxLength": "18",

"Default" : "0.0.0.0/0",

"AllowedPattern" : "(\\d{1,3})\\.(\\d{1,3})\\.(\\d{1,3})\\.(\\d{1,3})/(\\d{1,2})",

"ConstraintDescription" : "must be a valid CIDR range of the form x.x.x.x/x."

},

"VPCNetworkCIDR" : {

"Description": "The CIDR block for the entire VPC network",

"Type": "String",

"AllowedPattern" : "(\\d{1,3})\\.(\\d{1,3})\\.(\\d{1,3})\\.(\\d{1,3})/(\\d{1,2})",

"Default": "10.114.0.0/16",

"ConstraintDescription" : "must be an IPv4 dotted quad plus slash plus network bit length in CIDR format"

},

"VPCSubnet0CIDR" : {

"Description": "The CIDR block for VPC subnet0 segment",

"Type": "String",

"AllowedPattern" : "(\\d{1,3})\\.(\\d{1,3})\\.(\\d{1,3})\\.(\\d{1,3})/(\\d{1,2})",

"Default": "10.114.0.0/24",

"ConstraintDescription" : "must be an IPv4 dotted quad plus slash plus network bit length in CIDR format"

},

"VPCSubnet1CIDR" : {

"Description": "The CIDR block for VPC subnet1 segment",

"Type": "String",

"AllowedPattern" : "(\\d{1,3})\\.(\\d{1,3})\\.(\\d{1,3})\\.(\\d{1,3})/(\\d{1,2})",

"Default": "10.114.1.0/24",

"ConstraintDescription" : "must be an IPv4 dotted quad plus slash plus network bit length in CIDR format"

},

"VPCSubnet2CIDR" : {

"Description": "The CIDR block for VPC subnet2 segment",

"Type": "String",

"AllowedPattern" : "(\\d{1,3})\\.(\\d{1,3})\\.(\\d{1,3})\\.(\\d{1,3})/(\\d{1,2})",

"Default": "10.114.2.0/24",

"ConstraintDescription" : "must be an IPv4 dotted quad plus slash plus network bit length in CIDR format"

}

},

"Resources" : {

"VPC" : {

"Type" : "AWS::EC2::VPC",

"Properties" : {

"EnableDnsSupport" : "true",

"EnableDnsHostnames" : "true",

"CidrBlock" : { "Ref": "VPCNetworkCIDR" },

"Tags" : [

{ "Key" : "Name", "Value" : { "Fn::Join": [ "-", [ "vpc", { "Ref": "Project" }, { "Ref" : "Environment" } ] ] } },

{ "Key" : "Project", "Value" : { "Ref": "Project" } },

{ "Key" : "Environment", "Value" : { "Ref": "Environment" } }

]

}

},

"VPCSubnet0" : {

"Type" : "AWS::EC2::Subnet",

"Properties" : {

"VpcId" : { "Ref" : "VPC" },

"AvailabilityZone": { "Fn::Select" : [ 0, { "Fn::GetAZs" : "" } ] },

"CidrBlock" : { "Ref": "VPCSubnet0CIDR" },

"Tags" : [

{ "Key" : "Name", "Value" : { "Fn::Join": [ "-", [ "subnet", { "Ref": "Project" }, { "Ref": "Environment" } ] ] } },

{ "Key" : "AZ", "Value" : { "Fn::Select" : [ 0, { "Fn::GetAZs" : "" } ] } },

{ "Key" : "Project", "Value" : { "Ref": "Project" } },

{ "Key" : "Environment", "Value" : { "Ref": "Environment" } }

]

}

},

"VPCSubnet1" : {

"Type" : "AWS::EC2::Subnet",

"Properties" : {

"VpcId" : { "Ref" : "VPC" },

"AvailabilityZone": { "Fn::Select" : [ 1, { "Fn::GetAZs" : "" } ] },

"CidrBlock" : { "Ref": "VPCSubnet1CIDR" },

"Tags" : [

{ "Key" : "Name", "Value" : { "Fn::Join": [ "-", [ "subnet", { "Ref": "Project" }, { "Ref": "Environment" } ] ] } },

{ "Key" : "AZ", "Value" : { "Fn::Select" : [ 1, { "Fn::GetAZs" : "" } ] } },

{ "Key" : "Project", "Value" : { "Ref": "Project" } },

{ "Key" : "Environment", "Value" : { "Ref": "Environment" } }

]

}

},

"VPCSubnet2" : {

"Type" : "AWS::EC2::Subnet",

"Properties" : {

"VpcId" : { "Ref" : "VPC" },

"AvailabilityZone": { "Fn::Select" : [ 2, { "Fn::GetAZs" : "" } ] },

"CidrBlock" : { "Ref": "VPCSubnet2CIDR" },

"Tags" : [

{ "Key" : "Name", "Value" : { "Fn::Join": [ "-", [ "subnet", { "Ref": "Project" }, { "Ref": "Environment" } ] ] } },

{ "Key" : "AZ", "Value" : { "Fn::Select" : [ 2, { "Fn::GetAZs" : "" } ] } },

{ "Key" : "Project", "Value" : { "Ref": "Project" } },

{ "Key" : "Environment", "Value" : { "Ref": "Environment" } }

]

}

},

"InternetGateway" : {

"Type" : "AWS::EC2::InternetGateway",

"Properties" : {

"Tags" : [

{ "Key" : "Name", "Value" : { "Fn::Join": [ "-", [ "igw", { "Ref": "Project" }, { "Ref": "Environment" } ] ] } },

{ "Key" : "Project", "Value" : { "Ref": "Project" } },

{ "Key" : "Environment", "Value" : { "Ref": "Environment" } }

]

}

},

"GatewayToInternet" : {

"Type" : "AWS::EC2::VPCGatewayAttachment",

"Properties" : {

"VpcId" : { "Ref" : "VPC" },

"InternetGatewayId" : { "Ref" : "InternetGateway" }

}

},

"PublicRouteTable" : {

"Type" : "AWS::EC2::RouteTable",

"DependsOn" : "GatewayToInternet",

"Properties" : {

"VpcId" : { "Ref" : "VPC" },

"Tags" : [

{ "Key" : "Name", "Value" : { "Fn::Join": [ "-", [ "route", { "Ref": "Project" }, { "Ref" : "Environment" } ] ] } },

{ "Key" : "Project", "Value" : { "Ref": "Project" } },

{ "Key" : "Environment", "Value" : { "Ref": "Environment" } }

]

}

},

"PublicRoute" : {

"Type" : "AWS::EC2::Route",

"DependsOn" : "GatewayToInternet",

"Properties" : {

"RouteTableId" : { "Ref" : "PublicRouteTable" },

"DestinationCidrBlock" : "0.0.0.0/0",

"GatewayId" : { "Ref" : "InternetGateway" }

}

},

"VPCSubnet0RouteTableAssociation" : {

"Type" : "AWS::EC2::SubnetRouteTableAssociation",

"Properties" : {

"SubnetId" : { "Ref" : "VPCSubnet0" },

"RouteTableId" : { "Ref" : "PublicRouteTable" }

}

},

"VPCSubnet1RouteTableAssociation" : {

"Type" : "AWS::EC2::SubnetRouteTableAssociation",

"Properties" : {

"SubnetId" : { "Ref" : "VPCSubnet1" },

"RouteTableId" : { "Ref" : "PublicRouteTable" }

}

},

"VPCSubnet2RouteTableAssociation" : {

"Type" : "AWS::EC2::SubnetRouteTableAssociation",

"Properties" : {

"SubnetId" : { "Ref" : "VPCSubnet2" },

"RouteTableId" : { "Ref" : "PublicRouteTable" }

}

},

"InstanceRole": {

"Type": "AWS::IAM::Role",

"Properties": {

"AssumeRolePolicyDocument": {

"Version": "2012-10-17",

"Statement": [

{

"Effect": "Allow",

"Principal": {

"Service": [ "ec2.amazonaws.com" ]

},

"Action": [ "sts:AssumeRole" ]

}

]

},

"Path": "/",

"Policies": [

{

"PolicyName": "ApplicationPolicy",

"PolicyDocument": {

"Version": "2012-10-17",

"Statement": [

{

"Effect": "Allow",

"Action": [

"elasticbeanstalk:*",

"elastiCache:*",

"ec2:*",

"elasticloadbalancing:*",

"autoscaling:*",

"cloudwatch:*",

"dynamodb:*",

"s3:*",

"sns:*",

"sqs:*",

"cloudformation:*",

"rds:*",

"iam:AddRoleToInstanceProfile",

"iam:CreateInstanceProfile",

"iam:CreateRole",

"iam:PassRole",

"iam:ListInstanceProfiles"

],

"Resource": "*"

}

]

}

}

]

}

},

"InstanceProfile": {

"Type": "AWS::IAM::InstanceProfile",

"Properties": {

"Path": "/",

"Roles": [ { "Ref": "InstanceRole" } ]

}

},

"VPCSecurityGroup" : {

"Type" : "AWS::EC2::SecurityGroup",

"Properties" : {

"GroupDescription" : { "Fn::Join": [ "", [ "VPC Security Group for ", { "Fn::Join": [ "-", [ { "Ref": "Project" }, { "Ref": "Environment" } ] ] } ] ] },

"SecurityGroupIngress" : [

{"IpProtocol": "tcp", "FromPort" : "22", "ToPort" : "22", "CidrIp" : { "Ref" : "SSHFrom" }},

{"IpProtocol": "tcp", "FromPort": "80", "ToPort": "80", "CidrIp": "0.0.0.0/0" },

{"IpProtocol": "tcp", "FromPort": "443", "ToPort": "443", "CidrIp": "0.0.0.0/0" }

],

"VpcId" : { "Ref" : "VPC" },

"Tags" : [

{ "Key" : "Name", "Value" : { "Fn::Join": [ "-", [ "sg", { "Ref": "Project" }, { "Ref" : "Environment" } ] ] } },

{ "Key" : "Project", "Value" : { "Ref": "Project" } },

{ "Key" : "Environment", "Value" : { "Ref": "Environment" } }

]

}

},

"VPCSGIngress": {

"Type": "AWS::EC2::SecurityGroupIngress",

"Properties": {

"GroupId": { "Ref" : "VPCSecurityGroup" },

"IpProtocol": "-1",

"FromPort": "0",

"ToPort": "65535",

"SourceSecurityGroupId": { "Ref": "VPCSecurityGroup" }

}

}

},

"Outputs" : {

"VpcId" : {

"Description" : "VPC Id",

"Value" : { "Ref" : "VPC" }

},

"VPCDefaultNetworkAcl" : {

"Description" : "VPC",

"Value" : { "Fn::GetAtt" : ["VPC", "DefaultNetworkAcl"] }

},

"VPCDefaultSecurityGroup" : {

"Description" : "VPC Default Security Group that we blissfully ignore thanks to self-referencing bugs",

"Value" : { "Fn::GetAtt" : ["VPC", "DefaultSecurityGroup"] }

},

"VPCSecurityGroup" : {

"Description" : "VPC Security Group created by this stack",

"Value" : { "Ref": "VPCSecurityGroup" }

},

"VPCSubnet0": {

"Description": "The subnet id for VPCSubnet0",

"Value": {

"Ref": "VPCSubnet0"

}

},

"VPCSubnet1": {

"Description": "The subnet id for VPCSubnet1",

"Value": {

"Ref": "VPCSubnet1"

}

},

"VPCSubnet2": {

"Description": "The subnet id for VPCSubnet2",

"Value": {

"Ref": "VPCSubnet2"

}

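},

"InstanceProfile": {

"Description": "The IAM instance profile for EC2 instances",

"Value": { "Ref": "InstanceProfile" }

}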

}

}

Here is an example CloudFormation parameters file for this template:

[

{ "ParameterKey": "Project", "ParameterValue": "myapp" },

{ "ParameterKey": "Environment", "ParameterValue": "dev" },

{ "ParameterKey": "VPCNetworkCIDR", "ParameterValue": "10.0.0.0/16" },

{ "ParameterKey": "VPCSubnet0CIDR", "ParameterValue": "10.0.0.0/24" },

{ "ParameterKey": "VPCSubnet1CIDR", "ParameterValue": "10.0.1.0/24" },

{ "ParameterKey": "VPCSubnet2CIDR", "ParameterValue": "10.0.2.0/24" }

]
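Before creating a stack, the template can be checked for syntax errors with aws cloudformation validate-template. The sketch below wraps that call; validate_template is a hypothetical function name, the profile and region defaults are assumptions matching this walkthrough, and the check covers the template only, not the parameter values.

```shell
# Hypothetical helper: validate a CloudFormation template file in the
# current directory before creating or updating a stack from it.
validate_template() {
  local template="$1"
  if [ ! -f "$template" ]; then
    echo "missing template $template" >&2
    return 1
  fi
  aws cloudformation validate-template \
    --template-body "file://$(pwd)/$template" \
    --profile "${AWS_PROFILE:-aws-dev}" \
    --region "${AWS_REGION:-us-east-1}"
}
```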

To script the creation, updating, watching, and deleting of the CloudFormation VPC, I have this Makefile as well:

STACK:=myapp-dev

TEMPLATE:=cloudformation-template_vpc-iam.json

PARAMETERS:=cloudformation-parameters_myapp-dev.json

AWS_REGION:=us-east-1

AWS_PROFILE:=aws-dev

all:

@which aws || pip install awscli

aws cloudformation create-stack --stack-name $(STACK) --template-body file://`pwd`/$(TEMPLATE) --parameters file://`pwd`/$(PARAMETERS) --capabilities CAPABILITY_IAM --profile $(AWS_PROFILE) --region $(AWS_REGION)

update:

aws cloudformation update-stack --stack-name $(STACK) --template-body file://`pwd`/$(TEMPLATE) --parameters file://`pwd`/$(PARAMETERS) --capabilities CAPABILITY_IAM --profile $(AWS_PROFILE) --region $(AWS_REGION)

events:

aws cloudformation describe-stack-events --stack-name $(STACK) --profile $(AWS_PROFILE) --region $(AWS_REGION)

watch:

watch --interval 10 "bash -c 'make events | head -25'"

output:

@which jq || ( which brew && brew install jq || which apt-get && apt-get install jq || which yum && yum install jq || which choco && choco install jq)

aws cloudformation describe-stacks --stack-name $(STACK) --profile $(AWS_PROFILE) --region $(AWS_REGION) | jq -r '.Stacks[].Outputs'

delete:

aws cloudformation delete-stack --stack-name $(STACK) --profile $(AWS_PROFILE) --region $(AWS_REGION)

You can get these same files by cloning my github project, and assuming you have a profile named aws-dev as mentioned above, you can even run make and have it create the myapp-dev VPC via CloudFormation:

git clone https://github.com/ianblenke/aws-docker-walkthrough

cd aws-docker-walkthrough

make

You can run make watch to watch the CloudFormation events and wait for a CREATE_COMPLETE state.
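For scripts that need to block until the stack is ready, a polling loop is an alternative to watching by eye. This is a minimal sketch with a hypothetical wait_for_stack function; the profile and region defaults are assumptions, and the built-in aws cloudformation wait stack-create-complete waiter may serve the same purpose.

```shell
# Hypothetical helper: poll a stack until it leaves the *_IN_PROGRESS states.
wait_for_stack() {
  local stack="$1" interval="${2:-10}" status
  while :; do
    status=$(aws cloudformation describe-stacks --stack-name "$stack" \
      --profile "${AWS_PROFILE:-aws-dev}" \
      --region "${AWS_REGION:-us-east-1}" \
      --query 'Stacks[0].StackStatus' --output text) || return 1
    case "$status" in
      *_IN_PROGRESS) sleep "$interval" ;;                        # still working
      CREATE_COMPLETE|UPDATE_COMPLETE) echo "$status"; return 0 ;;
      *) echo "$status" >&2; return 1 ;;                         # failed or rolled back
    esac
  done
}
```

Something like wait_for_stack myapp-dev && make output would then only print the outputs once the stack has finished creating.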

When this is complete, you can see the CloudFormation outputs by running:

make output

The output will look something like this:

aws cloudformation describe-stacks --stack-name myapp-dev --profile aws-dev --region us-east-1 | jq -r '.Stacks[].Outputs'

[

{

"Description": "VPC Id",

"OutputKey": "VpcId",

"OutputValue": "vpc-b7d1d8d2"

},

{

"Description": "VPC",

"OutputKey": "VPCDefaultNetworkAcl",

"OutputValue": "acl-b3cfc7d6"

},

{

"Description": "VPC Default Security Group that we blissfully ignore thanks to self-referencing bugs",

"OutputKey": "VPCDefaultSecurityGroup",

"OutputValue": "sg-3e50a559"

},

{

"Description": "VPC Security Group created by this stack",

"OutputKey": "VPCSecurityGroup",

"OutputValue": "sg-0c50a56b"

},

{

"Description": "The subnet id for VPCSubnet0",

"OutputKey": "VPCSubnet0",

"OutputValue": "subnet-995236b2"

},

{

"Description": "The subnet id for VPCSubnet1",

"OutputKey": "VPCSubnet1",

"OutputValue": "subnet-6aa4fd1d"

},

{

"Description": "The subnet id for VPCSubnet2",

"OutputKey": "VPCSubnet2",

"OutputValue": "subnet-ad3644f4"

},

{

"Description": "The IAM instance profile for EC2 instances",

"OutputKey": "InstanceProfile",

"OutputValue": "myapp-dev-InstanceProfile-1KCQJP9M5TSVZ"

}

]

These CloudFormation Outputs list parameters that we will need to pass to the ElasticBeanstalk Environment creation during the next part of this walkthrough.
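When only one value is needed, the CLI's own --query option can pick it out without jq. This is a sketch under the same assumptions as before (aws-dev profile, us-east-1 region); stack_output is a hypothetical function name.

```shell
# Hypothetical helper: print a single CloudFormation output value by its key.
stack_output() {
  local stack="$1" key="$2"
  aws cloudformation describe-stacks --stack-name "$stack" \
    --profile "${AWS_PROFILE:-aws-dev}" \
    --region "${AWS_REGION:-us-east-1}" \
    --query "Stacks[0].Outputs[?OutputKey=='${key}'].OutputValue" \
    --output text
}
```

For example, stack_output myapp-dev VPCSecurityGroup should print just the security group id from the outputs listed above.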

One final VPC note: IAM permissions for EC2 instance profiles

As a general rule of thumb, each AWS ElasticBeanstalk Application Environment should be given its own IAM Instance Profile to use.

Each AWS EC2 instance should be allowed to assume an IAM role for an IAM instance profile that gives it access to the AWS cloud resources it must interface with.

This is accomplished by introspecting on AWS instance metadata. If you haven’t been exposed to this yet, I strongly recommend poking around at http://169.254.169.254 from your EC2 instances:

curl http://169.254.169.254/latest/meta-data/iam/security-credentials/role-myapp-dev

The JSON returned from that command allows an AWS library call with no credentials to automatically obtain time-limited IAM STS credentials when run on AWS EC2 instances.

This avoids having to embed “permanent” IAM access/secret keys as environment variables that may “leak” over time to parties that shouldn’t have access.
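The role name does not need to be hardcoded, since the metadata service lists it. The sketch below looks up the role and then fetches its credentials; the function names are hypothetical, and it assumes plain unauthenticated metadata requests as in the curl example above (instances that require session tokens for the metadata service would need an extra token header).

```shell
METADATA_URL='http://169.254.169.254/latest/meta-data'

# Print the name of the IAM role attached to this instance's profile.
instance_role_name() {
  curl -s "$METADATA_URL/iam/security-credentials/"
}

# Print the temporary credentials JSON for that role.
instance_role_credentials() {
  local role
  role=$(instance_role_name) || return 1
  if [ -z "$role" ]; then
    echo "no instance profile role found" >&2
    return 1
  fi
  curl -s "$METADATA_URL/iam/security-credentials/$role"
}
```

This only works from inside an EC2 instance that was launched with an instance profile.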

Early on, we tried to do this as an ebextension in .ebextensions/00_iam.config, but this only works if the admin running the eb create has IAM permissions for the AWS account, and it appears impossible to change the launch InstanceProfile by defining option settings or overriding cloud resources in an ebextensions config file.

Instead, the VPC above generates an InstanceProfile that can be referenced later. More on that later in Part 2.

Stay tuned…

Today, I stumbled on the official CoreOS page on ECS.

I’ve been putting off ECS for a while; it was time to give it a try.

To create the ECS cluster, we will need the aws commandline tool:

which aws || pip install awscli

Make sure you have your AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY defined in your shell environment.

Create the ECS cluster:

aws ecs create-cluster --cluster-name My-ECS-Cluster

{

"cluster": {

"clusterName": "My-ECS-Cluster",

"status": "ACTIVE",

"clusterArn": "arn:aws:ecs:us-east-1:123456789012:cluster/My-ECS-Cluster"

}

}

Install the global fleet unit for amazon-ecs-agent.service:

cat <<'EOF' > amazon-ecs-agent.service

[Unit]

Description=Amazon ECS Agent

After=docker.service

Requires=docker.service

[Service]

Environment=ECS_CLUSTER=My-ECS-Cluster

Environment=ECS_LOGLEVEL=warn

Environment=AWS_REGION=us-east-1

ExecStartPre=-/usr/bin/docker kill ecs-agent

ExecStartPre=-/usr/bin/docker rm ecs-agent

ExecStartPre=/usr/bin/docker pull amazon/amazon-ecs-agent

ExecStart=/usr/bin/docker run --name ecs-agent \

--env=ECS_CLUSTER=${ECS_CLUSTER} \

--env=ECS_LOGLEVEL=${ECS_LOGLEVEL} \

--publish=127.0.0.1:51678:51678 \

--volume=/var/run/docker.sock:/var/run/docker.sock \

amazon/amazon-ecs-agent

ExecStop=/usr/bin/docker stop ecs-agent

[X-Fleet]

Global=true

EOF

fleetctl start amazon-ecs-agent.service

This registers a ContainerInstance to the My-ECS-Cluster in region us-east-1.

Note: this is using the EC2 instance’s instance profile IAM credentials. You will want to make sure you’ve assigned an instance profile with a Role that has “ecs:*” access. For this, you may want to take a look at the Amazon ECS Quickstart CloudFormation template.

Now from a CoreOS host, we can query locally to enumerate the running ContainerInstances in our fleet:

fleetctl list-machines -fields=ip -no-legend | while read ip ; do \

echo $ip $(ssh -n $ip curl -s http://169.254.169.254/latest/meta-data/instance-id) \

$(ssh -n $ip curl -s http://localhost:51678/v1/metadata | \

docker run -i realguess/jq jq .ContainerInstanceArn) ; \

done

Which returns something like:

10.113.0.23 i-12345678 "arn:aws:ecs:us-east-1:123456789012:container-instance/674140ae-1234-4321-1234-4abf7878caba"

10.113.1.42 i-23456789 "arn:aws:ecs:us-east-1:123456789012:container-instance/c3506771-1234-4321-1234-1f1b1783c924"

10.113.2.66 i-34567891 "arn:aws:ecs:us-east-1:123456789012:container-instance/75d30c64-1234-4321-1234-8be8edeec9c6"

And we can query ECS and get the same:

$ aws ecs list-container-instances --cluster My-ECS-Cluster | grep arn | cut -d'"' -f2 | \

xargs -L1 -I% aws ecs describe-container-instances --cluster My-ECS-Cluster --container-instance % | \

jq '.containerInstances[] | .ec2InstanceId + " " + .containerInstanceArn'

"i-12345678 arn:aws:ecs:us-east-1:123456789012:container-instance/674140ae-1234-4321-1234-4abf7878caba"

"i-23456789 arn:aws:ecs:us-east-1:123456789012:container-instance/c3506771-1234-4321-1234-1f1b1783c924"

"i-34567891 arn:aws:ecs:us-east-1:123456789012:container-instance/75d30c64-1234-4321-1234-8be8edeec9c6"

This ECS cluster is ready to use.

Unfortunately, there is no scheduler here. ECS is a harness for orchestrating docker containers in a cluster as tasks.

Where these tasks are allocated is left up to the AWS customer.

What we really need is a scheduler.

CoreOS has a form of a scheduler in fleet, but that is for fleet units of systemd services, and is not limited to docker containers as ECS is. Fleet’s scheduler is also currently a bit weak in that it schedules new units to the fleet machine with the fewest number of units.

Kubernetes has a random scheduler, which is better in a couple ways, but does not fairly allocate the system resources.

The best scheduler at present is Mesos, which takes into account resource sizing estimates and current utilization.

Normally, Mesos uses Mesos Slaves to run work. Mesos can also use ECS as a backend instead.

My next steps: Deploy Mesos using the ecs-mesos-scheduler-driver, as summarized by jpetazzo.

A Dockerfile describes both the layers of a Docker image and its working directory, environment variables, ports, entrypoint commands, and other important interfaces.

Test-Driven Design should drive a developer toward implementation details, not the other way around.

A devops without tests is a sad devops indeed.

Working toward a Docker-based development environment, my first thoughts were toward Serverspec by Gosuke Miyashita, as it is entirely framework agnostic. Gosuke gave an excellent presentation at ChefConf this year reinforcing that Serverspec is not a Chef-centric tool, and works quite well in conjunction with other configuration management tools.

Researching Serverspec and docker a bit more, Taichi Nakashima based his TDD of Dockerfile by RSpec on OS/X using ssh.

With Docker 1.3 and later, there is a “docker exec” interactive docker API for allowing live sessions on processes spawned in the same process namespace as a running container, effectively allowing external access into a running docker container using only the docker API.

Pieter Joost van de Sande posted about using the docker-api to accomplish the goal of testing a Dockerfile. His work is based on the docker-api gem (github swipely/docker-api).

Looking into the docker-api source, there is no support yet for docker 1.3’s exec API interface to run Serverspec tests against the contents of a running docker container.

Attempting even the most basic docker API calls with docker-api, issue 202 made it apparent that TLS support for boot2docker would need to be addressed first.

Here is my functional spec_helper.rb with the fixes necessary to use docker-api without modifications:

require "docker"

docker_host = ENV['DOCKER_HOST'].dup

if(ENV['DOCKER_TLS_VERIFY'])

cert_path = File.expand_path ENV['DOCKER_CERT_PATH']

Docker.options = {

client_cert: File.join(cert_path, 'cert.pem'),

client_key: File.join(cert_path, 'key.pem')

}

Excon.defaults[:ssl_ca_file] = File.join(cert_path, 'ca.pem')

docker_host.gsub!(/^tcp/,'https')

end

Docker.url = docker_host
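The same tcp-to-https rewrite can be checked from the shell before debugging it in Ruby. This is only a sketch: the function name `docker_api_url` is made up here, and unlike the Ruby helper it also maps the non-TLS case to http so the result can be handed to curl.

```shell
#!/bin/bash
# Sketch only: derive an HTTP(S) URL for the docker API from the
# boot2docker environment variables.

# Prints https://host:port when DOCKER_TLS_VERIFY is set, otherwise
# http://host:port. Expects DOCKER_HOST in the tcp://host:port form.
docker_api_url() {
  local host="${DOCKER_HOST:-}"
  host="${host#tcp://}"
  if [ -n "${DOCKER_TLS_VERIFY:-}" ]; then
    printf 'https://%s\n' "$host"
  else
    printf 'http://%s\n' "$host"
  fi
}

# Possible usage against a TLS-enabled boot2docker VM:
#   curl --cacert "$DOCKER_CERT_PATH/ca.pem" \
#        --cert "$DOCKER_CERT_PATH/cert.pem" \
#        --key "$DOCKER_CERT_PATH/key.pem" \
#        "$(docker_api_url)/version"
```

If that curl call answers, the certificates and URL are fine and any remaining failure is on the docker-api side.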

Following this, I can drive the generation of a Dockerfile with a spec:

require "spec_helper"

describe "dockerfile built my_app image" do

before(:all) do

@image = Docker::Image.all(:all => true).find { |image|

Docker::Util.parse_repo_tag( image.info['RepoTags'].first ).first == 'my_app'

}

p @image.json["Config"]["Env"] if @image

end

it "should exist" do

expect(@image).not_to be_nil

end

it "should have CMD" do

expect(@image.json["Config"]["Cmd"]).to include("/run.sh")

end

it "should expose the default port" do

expect(@image.json["Config"]["ExposedPorts"].has_key?("3000/tcp")).to be_truthy

end

it "should have environmental variable" do

expect(@image.json["Config"]["Env"]).to include("HOME=/usr/src/app")

end

end

This drives me iteratively to write a Dockerfile that looks like:

FROM rails:onbuild

ENV HOME /usr/src/app

ADD docker/run.sh /run.sh

RUN chmod 755 /run.sh

EXPOSE 3000

CMD /run.sh

Next step: extend docker-api to support exec for serverspec based testing of actual docker image contents.

Sláinte!

Saturday’s project was installing OpenStack on a ChromeBox.

Step 0: Identify your hardware, add RAM

Before you begin, make sure you know which ChromeOS device you have.

In my case, it was a Samsung Series 3 Chromebox.

Thankfully, the memory was very easy to upgrade to 16G, as the bottom snaps right off.

Step 1: Make a ChromeOS recovery USB

Plug in a 4G or larger USB stick, then open this URL on your ChromeOS device:

Follow the instructions.

We shouldn’t need this, but you never know. (And, yes, I did end up needing it during one of my iterations while writing this post).

Step 2: Enable developer mode

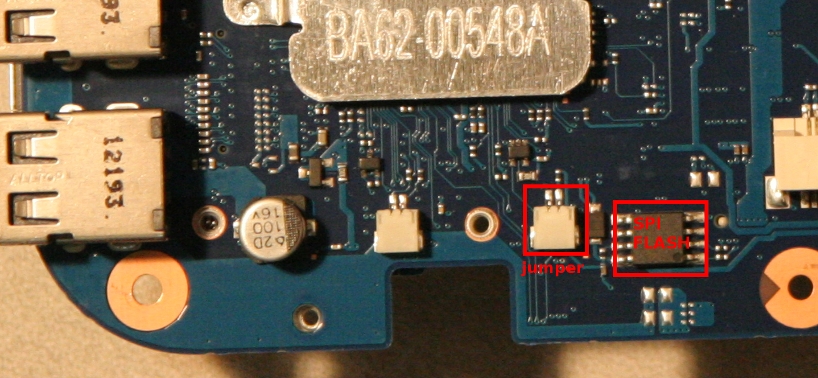

The switch in the image is how I put my ChromeBox into development mode.

After flipping the switch, reboot.

On this first reboot, the existing on-board storage will be wiped entirely, erasing any account credentials and cached content.

Step 3: Login to the console as “chronos”

Using ctrl-alt-F2, enter the username “chronos” at the login: prompt. Hit return at the password: prompt (the default chronos password is an empty string).

Note: You do not actually need to log in to Google via the UI first.

Step 4: Enable USB and SeaBIOS booting

Now that you have a shell as chronos, you can enable USB booting.

sudo crossystem dev_boot_usb=1

After enabling and rebooting, you can now boot from USB drives with ctrl-u

In order to install Ubuntu (or your distro of choice), we need to legacy boot. This requires a BIOS.

Newer ChromeBox hardware includes SeaBIOS natively.

sudo crossystem dev_boot_legacy=1

After enabling and rebooting, you can now boot to legacy images with ctrl-l

If you have an older ChromeBox (like the Samsung Series 3) that doesn’t have a SeaBIOS boot, you will need to flash one.

Flashing a new bootstrap requires a jumper or other physical change to hardware to allow the flashrom tool to write to flash.

NOTE: ASSUME THAT THIS WILL LIKELY BRICK YOUR CHROMEBOX. YOU HAVE BEEN WARNED

On the Samsung Series 3 ChromeBox, the jumper looks like this:

A bit of folded tin-foil made for a quick jumper.

John Lewis is maintaining a marvelous script that auto-detects and flashes an updated SeaBIOS for most ChromeBook/ChromeBox hardware:

wget https://johnlewis.ie/getnflash_johnlewis_rom.sh

chmod u+x getnflash_johnlewis_rom.sh

./getnflash_johnlewis_rom.sh

The script makes you type “If this bricks my Chromebook/box, on my head be it!” to make sure you understand that you are most likely going to brick your chromebox/chromebook by proceeding. This is no joke.

Being ok with potentially bricking my ChromeBox, I went ahead.

The script ran to completion without errors, and was thoroughly successful.

After rebooting, I now get a SeaBIOS splash identification (rather than the eventual sick computer).

The downside to doing this is that I now must boot off of an external USB device, as the SeaBIOS doesn’t seem to support booting from the built-in MMC SSD anymore.

Step 5: Install your Linux distribution

I went ahead and pulled an Ubuntu Trusty 14.04 ISO and dd’ed it to a USB stick on my Mac.

wget -c http://releases.ubuntu.com/14.04/ubuntu-14.04-desktop-amd64.iso

diskutil list

hdiutil unmount /Volumes/USBSTICK

sudo dd if=ubuntu-14.04-desktop-amd64.iso of=/dev/disk5 bs=1m
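Before the dd step above, it may be worth confirming the download is intact. The sketch below is an assumption on my part rather than something I ran at the time; the expected hash has to come from the SHA256SUMS file Ubuntu publishes next to the ISO, and is not reproduced here.

```shell
#!/bin/bash
# Sketch only: compare a file's SHA-256 against an expected value.

# Prints the SHA-256 of a file, using sha256sum where available (Linux)
# and falling back to shasum -a 256 (OS X).
sha256_of() {
  if command -v sha256sum >/dev/null 2>&1; then
    sha256sum "$1" | awk '{print $1}'
  else
    shasum -a 256 "$1" | awk '{print $1}'
  fi
}

# Succeeds only if the file's hash matches the expected hash.
verify_iso() {
  local file="$1" expected="$2"
  [ "$(sha256_of "$file")" = "$expected" ]
}

# Possible usage (the hash placeholder must be filled in by the reader):
#   verify_iso ubuntu-14.04-desktop-amd64.iso "<hash from SHA256SUMS>" \
#     && echo "ISO looks good"
```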

After it finished flashing, I removed the USB stick from my Mac and plugged it into the front of the ChromeBox.

The USB installation media for Ubuntu was detected by SeaBIOS as the second bootable USB device.

I also attached 2 external 1TB USB disks to the back as the media that will be installed to. These appeared as the third and fourth bootable devices to SeaBIOS.

With my new SeaBIOS bootstrap, I now must hit “Esc” and “2” to boot off of the first USB stick for the Ubuntu installation.

This presented me with the Ubuntu boot menu.

Beyond this point, I installed Ubuntu to the two external 1TB USB disks. Each disk has a primary boot partition (type “83”) for /boot as normal linux ext4, and a primary RAID partition (type “fd”) for the RAID1 mirror. On that mirror I layered LVM with a volume group named “vg” holding a “rootfs” and a “swapfs” logical volume. At the end, I installed the grub boot sector to /dev/sdb and /dev/sdc (the two external 1TB USB drives).
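I did this layout through the Ubuntu installer, but the by-hand equivalent might look roughly like the sketch below. The partition numbers (the second partition on each disk as the RAID member), the /dev/md0 device name, and the 4G swap size are assumptions for illustration. The function only prints the commands, since running them is destructive.

```shell
#!/bin/bash
# Sketch only: print (not run) the commands for a RAID1 mirror with LVM
# on top, roughly matching the layout described above.

plan_storage() {
  local disk_a="${1:-/dev/sdb}" disk_b="${2:-/dev/sdc}" vg="${3:-vg}"
  # Partition 2 on each disk is assumed to be the type "fd" RAID member.
  cat <<EOF
mdadm --create /dev/md0 --level=1 --raid-devices=2 ${disk_a}2 ${disk_b}2
pvcreate /dev/md0
vgcreate ${vg} /dev/md0
lvcreate --name swapfs --size 4G ${vg}
lvcreate --name rootfs --extents 100%FREE ${vg}
EOF
}
```

Calling `plan_storage` with no arguments prints the plan for /dev/sdb and /dev/sdc with a volume group named vg.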

After removing the USB stick for the Ubuntu installation media, the SeaBIOS entries shifted by 1.

With my new SeaBIOS bootstrap, I now must hit “Esc” and “2” to boot off of the first USB 1TB drive, or “3” for the second USB 1TB drive.

When I figure out how to get around the SeaBIOS hang on boot if I don’t do this, I will update this blog post.

Step 6: Devstack installation of OpenStack

From this point forward, I followed the DevStack All-in-one single-machine install guide.

My local.conf for the all-in-one install is a collection of bits and pieces collected while digging around:

[[local|localrc]]

SERVICE_TOKEN=a682f596-76f3-11e3-b3b2-e716f9080d50

ADMIN_PASSWORD=nomoresecrets

MYSQL_PASSWORD=iheartdatabases

RABBIT_PASSWORD=flopsymopsy

SERVICE_PASSWORD=$ADMIN_PASSWORD

#HOST_IP=10.0.0.106

DEST=/opt/stack

LOGFILE=$DEST/logs/stack.sh.log

LOGDAYS=7

#LOG_COLOR=False

# Uncomment these to grab the milestone-proposed branches from the repos:

#CINDER_BRANCH=milestone-proposed

#GLANCE_BRANCH=milestone-proposed

#HORIZON_BRANCH=milestone-proposed

#KEYSTONE_BRANCH=milestone-proposed

#KEYSTONECLIENT_BRANCH=milestone-proposed

#NOVA_BRANCH=milestone-proposed

#NOVACLIENT_BRANCH=milestone-proposed

#NEUTRON_BRANCH=milestone-proposed

#SWIFT_BRANCH=milestone-proposed

SWIFT_HASH=66a3d6b56c1f479c8b4e70ab5c2000f5

SWIFT_REPLICAS=1

SWIFT_DATA_DIR=$DEST/data/swift

FIXED_RANGE=10.1.0.0/24

FLOATING_RANGE=10.2.0.0/24

FIXED_NETWORK_SIZE=256

PUBLIC_INTERFACE=eth0

NET_MAN=FlatDHCPManager

FLAT_NETWORK_BRIDGE=br100

SCREEN_LOGDIR=$DEST/logs/screen

VOLUME_GROUP="vg"

VOLUME_NAME_PREFIX="cinder-"

VOLUME_BACKING_FILE_SIZE=10250M

API_RATE_LIMIT=False

VIRT_DRIVER=libvirt

LIBVIRT_TYPE=kvm

#SCHEDULER=nova.scheduler.filter_scheduler.FilterScheduler

As the stack user, running ./stack.sh kicked off the install, and it completed successfully.

At the end, it tells you the URLs to use to access your new OpenStack server:

Horizon is now available at http://10.0.0.106/

Keystone is serving at http://10.0.0.106:5000/v2.0/

Examples on using novaclient command line is in exercise.sh

The default users are: admin and demo

The password: nomoresecrets

This is your host ip: 10.0.0.106

I also ended up creating this ~/.fog file locally on my Mac, based on CloudFoundry’s guide to validating your OpenStack.

:openstack:

  :openstack_auth_url: http://10.0.0.106:5000/v2.0/tokens

  :openstack_api_key: nomoresecrets

  :openstack_username: admin

  :openstack_tenant: admin

  :openstack_region: RegionOne # Optional

With it, I can now use the fog command-line tool locally on my development Mac to manipulate the ChromeBox based OpenStack server.

Cheers!

A common devops problem when developing Docker containers is managing the orchestration of multiple containers in a development environment.

There are a number of orchestration harnesses for Docker available:

- Docker’s Fig

- blockade

- Vagrant

- kubernetes

- maestro-ng

- crane

- Centurylink’s Panamax

- Shipyard

- Decking

- NewRelic’s Centurion

- Spotify’s Helios

- Stampede

- Chef

- Ansible

- Flynn

- Shipper

- Octohost

- Tsuru with docker-cluster

- Flocker

- CloudFocker

- Clocker and Apache Brooklyn

- CloudFoundry’s docker-boshrelease/diego

- Mesosphere Deimos

- Deis (a PaaS that can git push deploy containers using Heroku buildpacks or a Dockerfile)

There are a number of hosted service offerings now as well:

- Amazon ECS

- CloudShell

- ElasticBox

- Aw, heck, just check the docker ecosystem mindmap

There are also RAFT/GOSSIP clustering solutions like:

My coreos-vagrant-kitchen-sink github project submits cloud-init units via a YAML file when booting member nodes. It’s a good model for production, but it’s a bit heavy for development.

Docker is currently working on Docker Clustering, but it is presently just a proof-of-concept and is now under a total re-write.

They are also implementing docker composition, which provides Fig-like functionality using upcoming docker “groups”.

That influence of Fig makes sense, as Docker bought Orchard.

Internally, Docker developers use Fig.

Docker’s website also directs everyone to Boot2Docker, as that is the tool Docker developers use as their docker baseline environment.

Boot2Docker spawns a VirtualBox based VM as well as a native docker client runtime on the developer’s host machine, and provides the DOCKER_HOST and related environment variables necessary for the client to talk to the VM.

This allows a developer’s Windows or OS/X machine to have a docker command that behaves as if the docker containers are running natively on their host machine.

While Fig is easy to install under OS/X as it has native Python support (“pip install fig”), installing Fig on a Windows developer workstation would normally require Python support be installed separately.

Rather than do that, I’ve built a new ianblenke/fig-docker docker Hub image, which is auto-built from ianblenke/docker-fig-docker on github.

This allows running fig inside a docker container using:

docker run -v $(pwd):/app \

-v $DOCKER_CERT_PATH:/certs \

-e DOCKER_CERT_PATH=/certs \

-e DOCKER_HOST=$DOCKER_HOST \

-e DOCKER_TLS_VERIFY=$DOCKER_TLS_VERIFY \

-ti --rm ianblenke/fig-docker fig --help

Alternatively, a developer can alias it:

alias fig="docker run -v \$(pwd):/app \

-v $DOCKER_CERT_PATH:/certs \

-e DOCKER_CERT_PATH=/certs \

-e DOCKER_HOST=$DOCKER_HOST \

-e DOCKER_TLS_VERIFY=$DOCKER_TLS_VERIFY \

-ti --rm ianblenke/fig-docker fig"

Now the developer can run fig as if it is running on their development host, continuing the boot2docker illusion.
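A shell function is an alternative to the alias, sketched here under the assumption that bash is the developer's shell. Everything inside the function body is expanded when fig is called rather than when it is defined, so the current directory and the boot2docker variables are always the live ones.

```shell
#!/bin/bash
# Sketch only: a function wrapper around the fig-docker image.
# All expansions happen at call time, so "cd" between calls is safe.
fig() {
  docker run -v "$(pwd):/app" \
    -v "${DOCKER_CERT_PATH:-}:/certs" \
    -e DOCKER_CERT_PATH=/certs \
    -e DOCKER_HOST="${DOCKER_HOST:-}" \
    -e DOCKER_TLS_VERIFY="${DOCKER_TLS_VERIFY:-}" \
    -ti --rm ianblenke/fig-docker fig "$@"
}
```

With that in a shell profile, `fig up` or `fig ps` behaves the same from any project directory.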

In the above examples, the current directory $(pwd) is being mounted as /app inside the docker container.

On a boot2docker install, the boot2docker VM is the actual source of that volume path.

That means you would actually have to have the current path inside the boot2docker VM as well.

To do that, on a Mac, do this:

boot2docker down

VBoxManage sharedfolder add boot2docker-vm -name home -hostpath /Users

boot2docker up

From this point forward, until the next boot2docker init, your boot2docker VM should have your home directory mounted as /Users and the path should be the same.

A similar trick happens for Windows hosts, providing the same path inside the boot2docker VM as a developer would use.

This allows a normalized docker/fig interface for developers to begin their foray into docker orchestration.

Let’s setup a very quick Ruby on Rails application from scratch, and then add a Dockerfile and fig.yml that spins up a mysql service for it to talk to.

Here’s a quick script that does just that. The only requirement is a functional docker command able to spin up containers.

#!/bin/bash

set -ex

# Source the boot2docker environment variables

eval $(boot2docker shellinit 2>/dev/null)

# Use a rails container to create a new rails project in the current directory called figgypudding

docker run -it --rm -v $(pwd):/app rails:latest bash -c 'rails new figgypudding; cp -a /figgypudding /app'

cd figgypudding

# Create the Dockerfile used to build the figgypudding_web:latest image used by the figgypudding_web_1 container

cat <<EOD > Dockerfile

FROM rails:onbuild

ENV HOME /usr/src/app

EOD

# This is the Fig orchestration configuration

cat <<EOF > fig.yml

mysql:

  environment:

    MYSQL_ROOT_PASSWORD: supersecret

    MYSQL_DATABASE: figgydata

    MYSQL_USER: figgyuser

    MYSQL_PASSWORD: password

  ports:

    - "3306:3306"

  image: mysql:latest

figgypudding:

  environment:

    RAILS_ENV: development

    DATABASE_URL: mysql2://figgyuser:password@172.17.42.1:3306/figgydata

  links:

    - mysql

  ports:

    - "3000:3000"

  build: .

  command: bash -xc 'bundle exec rake db:migrate && bundle exec rails server'

EOF

# Rails defaults to sqlite, convert it to use mysql

sed -i -e 's/sqlite3/mysql2/' Gemfile

# Update the Gemfile.lock using the rails container we referenced earlier

docker run --rm -v $(pwd):/usr/src/app -w /usr/src/app rails:latest bundle update

# Use the fig command from my fig-docker container to fire up the Fig formation

docker run -v $(pwd):/app -v $DOCKER_CERT_PATH:/certs \

-e DOCKER_CERT_PATH=/certs \

-e DOCKER_HOST=$DOCKER_HOST \

-e DOCKER_TLS_VERIFY=$DOCKER_TLS_VERIFY \

-ti --rm ianblenke/fig-docker fig up

After running that, there should now be a web server running on the boot2docker VM, which should generally be http://192.168.59.103:3000/ as that seems to be the common boot2docker default IP.

This is fig, distilled to its essence.

Beyond this point, a developer can “fig build ; fig up” and see the latest result of their work. This is something ideally added as a git post-commit hook or an iteration harness like Guard.
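A minimal sketch of the post-commit hook idea follows. The function name `install_fig_hook` is made up for this example, and the hook uses `fig up -d` (detached) instead of a plain `fig up` so that the commit does not block on the running containers; that choice is mine and easy to change.

```shell
#!/bin/bash
# Sketch only: write a git post-commit hook that rebuilds and restarts
# the Fig formation after every commit.
install_fig_hook() {
  local repo="${1:-.}"
  local hook="$repo/.git/hooks/post-commit"
  mkdir -p "$(dirname "$hook")"
  cat > "$hook" <<'HOOK'
#!/bin/bash
# Rebuild the images, then restart the containers in the background.
fig build && fig up -d
HOOK
  chmod +x "$hook"
}
```

Running `install_fig_hook .` inside the figgypudding checkout is all it takes to wire it up.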

While it may not appear pretty at first glance, realize that only cat and sed were used on the host here (and even those could have been avoided). No additional software was installed on the host, yet a rails app was created and deployed in docker containers, talking to a mysql server.

And therein lies the elegance of dockerizing application deployment: simple, clean, repeatable units of software. Orchestrated.

Have fun!

Self-standing Ceph/deis-store docker containers

Wed Nov 5, 2014 in docker, boot2docker, ceph, deis, aws, s3, orchestration

A common challenge for cloud orchestration is simulating or providing an S3 service layer, particularly for development environments.

As Docker is meant for immutable infrastructure, this poses somewhat of a challenge for production deployments. Rather than tackle that subject here, we’ll revisit persistence on immutable infrastructure in a production capacity in a future blog post.

The first challenge is identifying an S3 implementation to throw into a container.

There are a few feature sparse/dummy solutions that might suit development needs:

- s3-ninja (github scireum/s3ninja)

- fake-s3

- S3Mockup (and a number of others which I’d rather not even consider)

There are a number of good functional options for actual S3 implementations:

- ceph (github ceph/ceph), specifically the radosgw

- walrus from Eucalyptus

- riak cs

- libres3, backended by the opensource Skylable Sx

- cumulus is an S3 implementation for Nimbus

- cloudian which is a non-opensource commercial product

- swift3 as an S3 compatibility layer with swift on the backend

- vblob a node.js based attic’ed project at CloudFoundry

- parkplace backended by bittorrent

- boardwalk backended by ruby, sinatra, and mongodb

Of the above, one stands out: ceph, the underlying persistence engine used by a larger docker backended project, Deis.

Rather than re-invent the wheel, it is possible to use deis-store directly.

As Deis deploys on CoreOS, there is an understandable inherent dependency on etcd for service discovery.

If you happen to be targeting CoreOS, you can simply point your etcd --peers option or ETCD_HOST environment variable at $COREOS_PRIVATE_IPV4 and skip this next step.

First, make sure your environment includes the DOCKER_HOST and related variables for the boot2docker environment:

eval $(boot2docker shellinit)

Now, discover the IP of the boot2docker guest VM, as that is what we will bind the etcd to:

IP="$(boot2docker ip 2>/dev/null)"

Next, we can spawn etcd and publish the ports for the other containers to use:

docker run --name etcd \

--publish 4001:4001 \

--publish 7001:7001 \

--detach \

coreos/etcd:latest \

/go/bin/app -listen-client-urls http://0.0.0.0:4001 \

-advertise-client-urls http://$IP:4001 \

-listen-peer-urls http://0.0.0.0:7001 \

-initial-advertise-peer-urls http://$IP:7001 \

-data-dir=/tmp/etcd

Normally, we wouldn’t put the etcd persistence in a tmpfs for consistency reasons after a reboot, but for a development container: we love speed!

Now that we have an etcd container running, we can spawn the deis-store daemon container that runs the ceph object-store daemon (OSD) module.

docker run --name deis-store-daemon \

--env HOST=$IP \

--publish 6800 \

--net host \

--detach \

deis/store-daemon:latest

It is probably a good idea to mount the /var/lib/deis/store volume for persistence, but this is a developer container, so we’ll forego that step.

The ceph-osd will wait in a loop when starting until it can talk to ceph-mon, which is the next component provided by the deis-store monitor container.

In order to prepare the etcd config tree for deis-store monitor, we must first set a key for this new deis-store-daemon component.

While we could do that with a wget/curl PUT to the etcd client port (4001), using etcdctl makes things a bit easier.

It is generally a good idea to match the version of the etcdctl client with the version of etcd you are using.

As the CoreOS team doesn’t put out an etcdctl container as of yet, one way to do this is to build/install etcdctl inside a coreos/etcd container:

docker run --rm \

coreos/etcd \

/bin/sh -c "cd /go/src/github.com/coreos/etcd/etcdctl; \

go install ; \

/go/bin/etcdctl --peers $IP:4001 \

set /deis/store/hosts/$IP $IP"

This isn’t ideal, of course, as there is a slight delay as etcdctl is built and installed before we use it, but it serves the purpose.
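For comparison, the curl PUT mentioned above could be sketched as follows. It assumes the etcd v2 HTTP keys API under /v2/keys on the client port, and the function name `etcd_set` is hypothetical.

```shell
#!/bin/bash
# Sketch only: set an etcd key over HTTP instead of building etcdctl.
# Assumes the etcd v2 keys API is served on port 4001.
etcd_set() {
  local ip="$1" key="$2" value="$3"
  # -f fails on HTTP errors, -sS stays quiet but still reports failures.
  curl -fsS -X PUT "http://${ip}:4001/v2/keys${key}" \
    --data-urlencode "value=${value}"
}

# Possible usage, equivalent to the etcdctl call above:
#   etcd_set "$IP" "/deis/store/hosts/$IP" "$IP"
```

This avoids the build delay, at the cost of depending on curl being present on the host.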

There are also deis/store-daemon settings of etcd keys that customize the behavior of ceph-osd a bit.

Now we can start deis-store-monitor, which will use that key to spin up a ceph-mon that monitors this (and any other) ceph-osd instances likewise registered in the etcd configuration tree.

docker run --name deis-store-monitor \

--env HOST=$IP \

--publish 6789 \

--net host \

--detach \

deis/store-monitor:latest

As before, there are volumes that probably should be mounted for /etc/ceph and /var/lib/ceph/mon, but this is a development image, so we’ll skip that.

There are also deis/store-monitor settings of etcd keys that customize the behavior of ceph-mon a bit.

Now that ceph-mon is running, ceph-osd will continue starting up. We now have a single-node self-standing ceph storage platform, but no S3.

The S3 functionality is provided by the ceph-radosgw component, which is provided by the deis-store-gateway container.

docker run --name deis-store-gateway \

--hostname deis-store-gateway \

--env HOST=$IP \

--env EXTERNAL_PORT=8888 \

--publish 8888:8888 \

--detach \

deis/store-gateway:latest

There is no persistence in ceph-radosgw that warrants a volume mapping, so we can ignore that entirely regardless of environment.

There are also deis/store-gateway settings of etcd keys that customize the behavior of ceph-radosgw a bit.

We now have a functional self-standing S3 gateway, but we don’t know the credentials to use it. For that, we can run etcdctl again:

AWS_ACCESS_KEY_ID=$(docker run --rm coreos/etcd /bin/sh -c "cd /go/src/github.com/coreos/etcd/etcdctl; go install ; /go/bin/etcdctl --peers $IP:4001 get /deis/store/gateway/accessKey")

AWS_SECRET_ACCESS_KEY=$(docker run --rm coreos/etcd /bin/sh -c "cd /go/src/github.com/coreos/etcd/etcdctl; go install ; /go/bin/etcdctl --peers $IP:4001 get /deis/store/gateway/secretKey")

AWS_S3_HOST=$(docker run --rm coreos/etcd /bin/sh -c "cd /go/src/github.com/coreos/etcd/etcdctl; go install ; /go/bin/etcdctl --peers $IP:4001 get /deis/store/gateway/host")

AWS_S3_PORT=$(docker run --rm coreos/etcd /bin/sh -c "cd /go/src/github.com/coreos/etcd/etcdctl; go install ; /go/bin/etcdctl --peers $IP:4001 get /deis/store/gateway/port")
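The four lookups above repeat the same long command, so a small wrapper can tidy them up. This is a sketch with a made-up function name, `etcd_get`; the docker and etcdctl invocation inside it is the same one used above.

```shell
#!/bin/bash
# Sketch only: fetch one key from etcd by building and running etcdctl
# inside a throwaway coreos/etcd container.
etcd_get() {
  local ip="$1" key="$2"
  docker run --rm coreos/etcd /bin/sh -c \
    "cd /go/src/github.com/coreos/etcd/etcdctl; go install ; /go/bin/etcdctl --peers ${ip}:4001 get ${key}"
}

# Possible usage:
#   AWS_ACCESS_KEY_ID=$(etcd_get "$IP" /deis/store/gateway/accessKey)
#   AWS_SECRET_ACCESS_KEY=$(etcd_get "$IP" /deis/store/gateway/secretKey)
#   AWS_S3_HOST=$(etcd_get "$IP" /deis/store/gateway/host)
#   AWS_S3_PORT=$(etcd_get "$IP" /deis/store/gateway/port)
```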

Note that the host here isn’t the normal AWS gateway address, so you will need to configure your S3 client with this host and port explicitly.

Likewise, you may need to specify a URL scheme of “http”, as the above does not expose an HTTPS encrypted port.

There are also S3 client changes that may be necessary depending on the “calling format” of the client libraries. You may need to change things like paperclip to work with fog. There are numerous tools that work happily with ceph, like s3_to_ceph and even gems like fog-radosgw that try to help make this painless for your apps.
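As a rough illustration of pointing a client at the gateway, the sketch below builds the endpoint URL from the variables fetched earlier. The function name `s3_endpoint_url` is made up, the 8888 default mirrors the EXTERNAL_PORT used above, and the aws CLI usage line is an assumption that I have not verified against radosgw.

```shell
#!/bin/bash
# Sketch only: build a plain-http endpoint URL for the radosgw gateway
# from AWS_S3_HOST and AWS_S3_PORT.
s3_endpoint_url() {
  local host="${AWS_S3_HOST:-}"
  local port="${AWS_S3_PORT:-8888}"
  if [ -z "$host" ]; then
    echo "AWS_S3_HOST is not set" >&2
    return 1
  fi
  printf 'http://%s:%s\n' "$host" "$port"
}

# Possible usage with the aws CLI (credentials exported beforehand):
#   aws s3 ls --endpoint-url "$(s3_endpoint_url)"
```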

I will update this blog post shortly with an example of a containerized s3 client to show how to prove your ceph radosgw is working.

Have fun!

Boot2Docker command-line

Preface: the boot2docker README is a great place to discover the below commands in more detail.

Now that we have Boot2Docker installed, we need to initialize a VM instance

boot2docker init

This merely defines the default boot2docker VM; it does not start it. To do that, we need to bring it “up”

boot2docker up

When run, it looks something like this:

icbcfmbp:~ icblenke$ boot2docker up

Waiting for VM and Docker daemon to start...

..........ooo

Started.

Writing /Users/icblenke/.boot2docker/certs/boot2docker-vm/ca.pem

Writing /Users/icblenke/.boot2docker/certs/boot2docker-vm/cert.pem

Writing /Users/icblenke/.boot2docker/certs/boot2docker-vm/key.pem

To connect the Docker client to the Docker daemon, please set:

export DOCKER_CERT_PATH=/Users/icblenke/.boot2docker/certs/boot2docker-vm

export DOCKER_TLS_VERIFY=1

export DOCKER_HOST=tcp://192.168.59.103:2376

icbcfmbp:~ icblenke$

This is all fine and dandy, but that shell didn’t actually source those variables. To do that we use boot2docker shellinit:

eval $(boot2docker shellinit)

Now the shell has those variables exported for the running boot2docker VM.
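A quick guard for scripts that depend on those variables might look like the sketch below. The function name `require_docker_env` is made up for this example, and it relies on bash indirect expansion.

```shell
#!/bin/bash
# Sketch only: fail early when the boot2docker variables are missing.
require_docker_env() {
  local missing=0 name
  for name in DOCKER_HOST DOCKER_CERT_PATH DOCKER_TLS_VERIFY; do
    # ${!name} looks up the variable whose name is stored in $name.
    if [ -z "${!name:-}" ]; then
      echo "missing: $name (try: eval \$(boot2docker shellinit))" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

Calling `require_docker_env || exit 1` at the top of a script gives a clearer error than a failing docker command later on.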

The persistence of the boot2docker VM lasts only until we run a boot2docker destroy

boot2docker destroy

After doing this, there is no longer a VM defined. We would need to go back to the boot2docker init step above and repeat.

Docker command-line

From this point forward, we use the docker command to interact with the boot2docker VM as if we are on a linux docker host.

The docker command is just a compiled go application that makes RESTful calls to the docker daemon inside the linux VM.

bash-3.2$ docker info

Containers: 0

Images: 0

Storage Driver: aufs

Root Dir: /mnt/sda1/var/lib/docker/aufs

Dirs: 0

Execution Driver: native-0.2

Kernel Version: 3.16.4-tinycore64

Operating System: Boot2Docker 1.3.1 (TCL 5.4); master : 9a31a68 - Fri Oct 31 03:14:34 UTC 2014

Debug mode (server): true

Debug mode (client): false

Fds: 10

Goroutines: 11

EventsListeners: 0

Init Path: /usr/local/bin/docker

This holds true for both OS/X and Windows.

The boot2docker facade is just a handy wrapper to prepare the guest linux host VM for the docker daemon and local docker command-line client for your development host OS environment.

And now you have a starting point for exploring Docker!

Starting with a new team of developers, it helps to document the bootstrapping steps to a development environment.

Rather than try to use a convergence tool like Chef, Puppet, Ansible, or Salt, this time the environment will embrace Docker.

We could use a tool like Vagrant, but we need to support both Windows and Mac development workstations, and Vagrant under Windows can be vexing.

For this, we will begin anew using Boot2Docker

Before we begin, be sure to install VirtualBox from Oracle’s VirtualBox.org website

The easiest way to install VirtualBox is to use HomeBrew Cask under HomeBrew

brew install caskroom/cask/brew-cask

brew cask install virtualbox

The easiest way to install boot2docker is to use HomeBrew

brew install boot2docker

Afterward, be sure to upgrade the homebrew bottle to the latest version of boot2docker:

boot2docker upgrade

Alternatively, a sample commandline install of boot2docker might look like this:

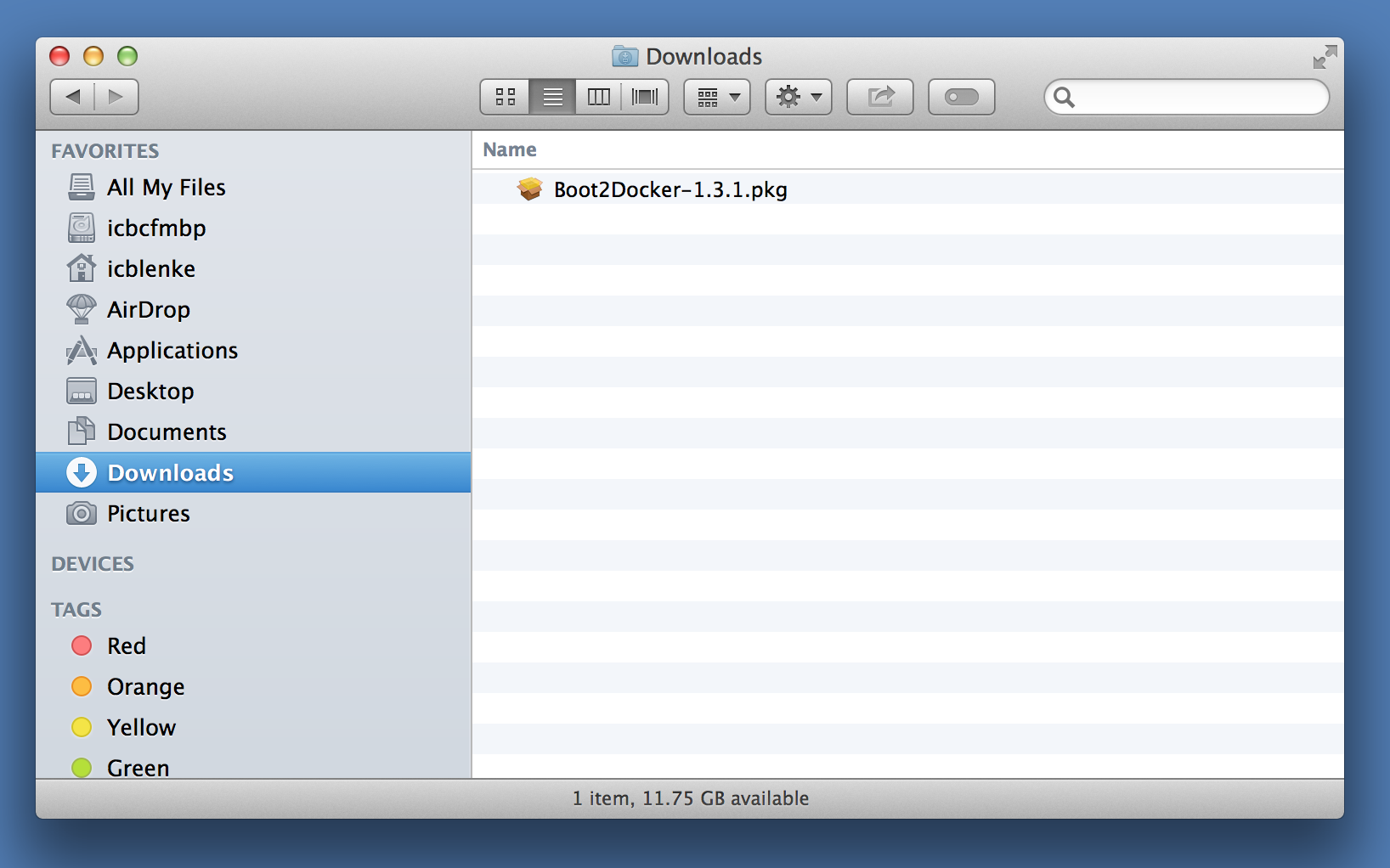

wget -P ~/Downloads https://github.com/boot2docker/osx-installer/releases/download/v1.3.1/Boot2Docker-1.3.1.pkg

sudo installer -pkg ~/Downloads/Boot2Docker-1.3.1.pkg -target /

I’ll leave the commandline install of VirtualBox up to your imagination. With HomeBrew Cask, there’s really not much of a point.

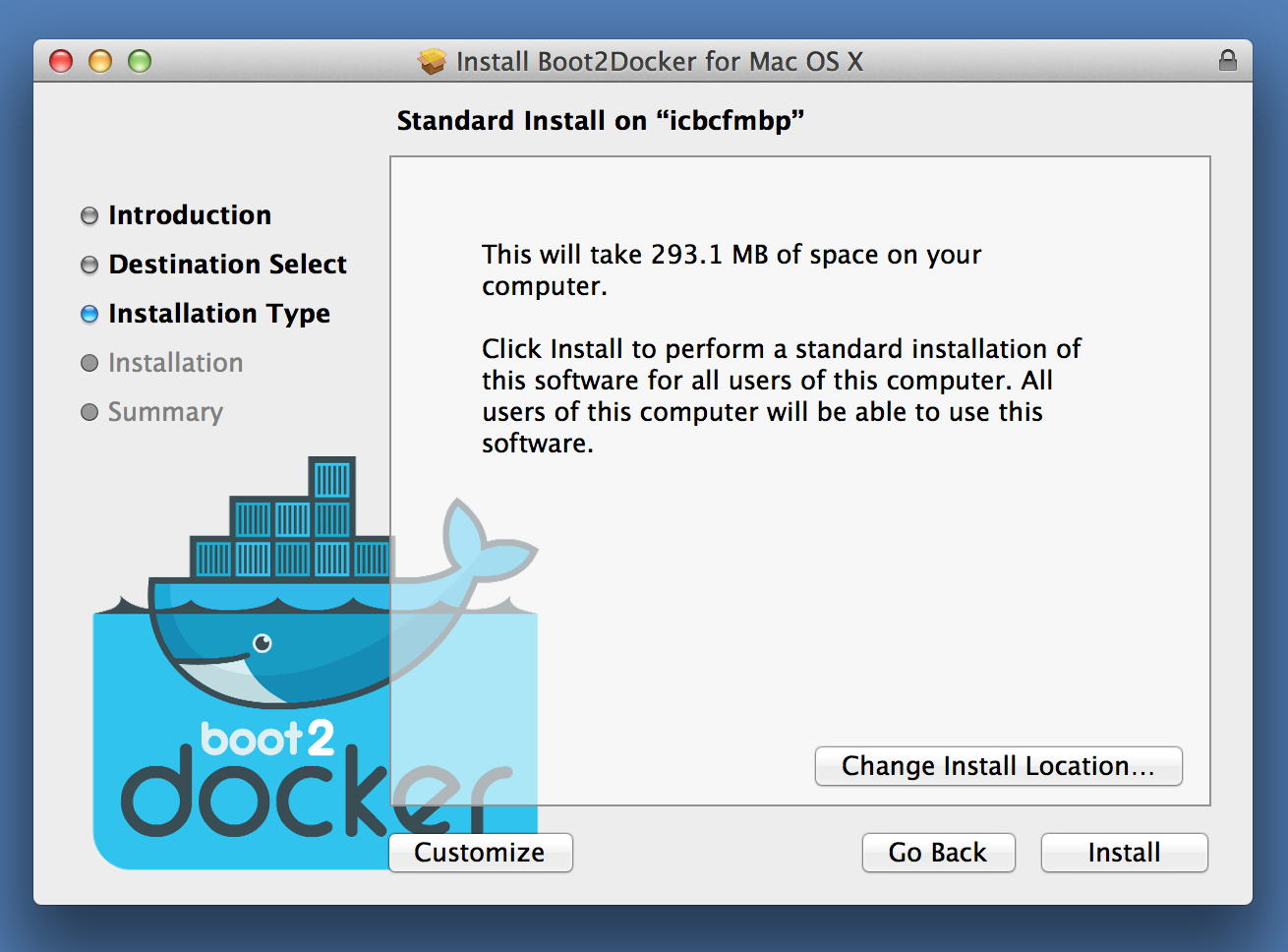

If you’re still not comfortable, below is a pictorial screenshot guide to installing boot2docker the point-and-click way.

Step 0

Download boot2docker for OS/X or boot2docker for Windows

Step 1

Run the downloaded Boot2Docker.pkg or docker-install.exe to kick off the installer.

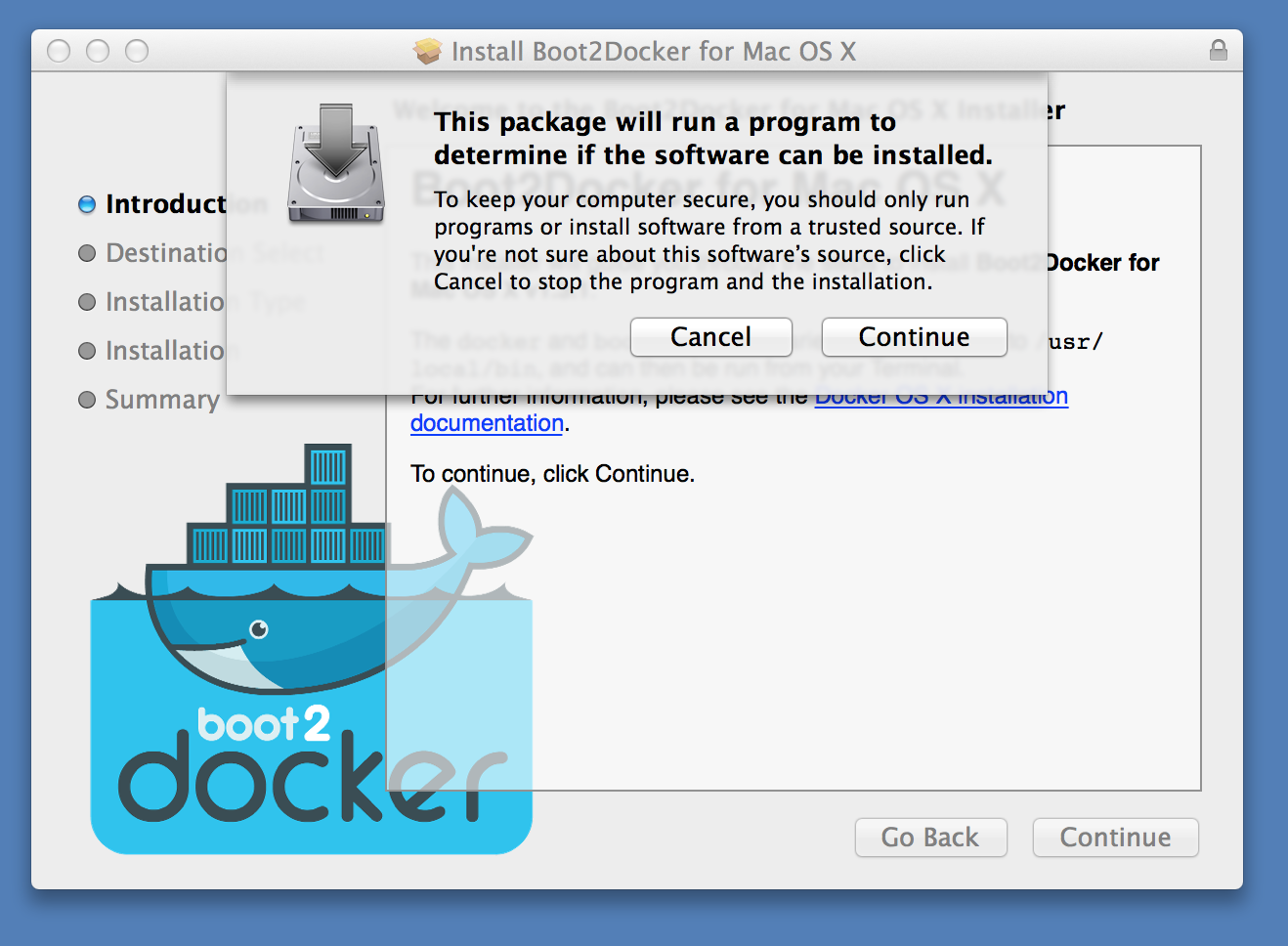

Step 2

Click the Continue button to allow the installer to run a program to detect if boot2docker can be installed.

Step 3

Click the Continue button to proceed beyond the initial installation instructions dialog.

Step 4

The installer will now ask for an admin username/password to obtain admin rights to install boot2docker.

Step 5

Before installing, the installer will advise how much space the install will take. Click the Install button to start the actual install.

Step 6

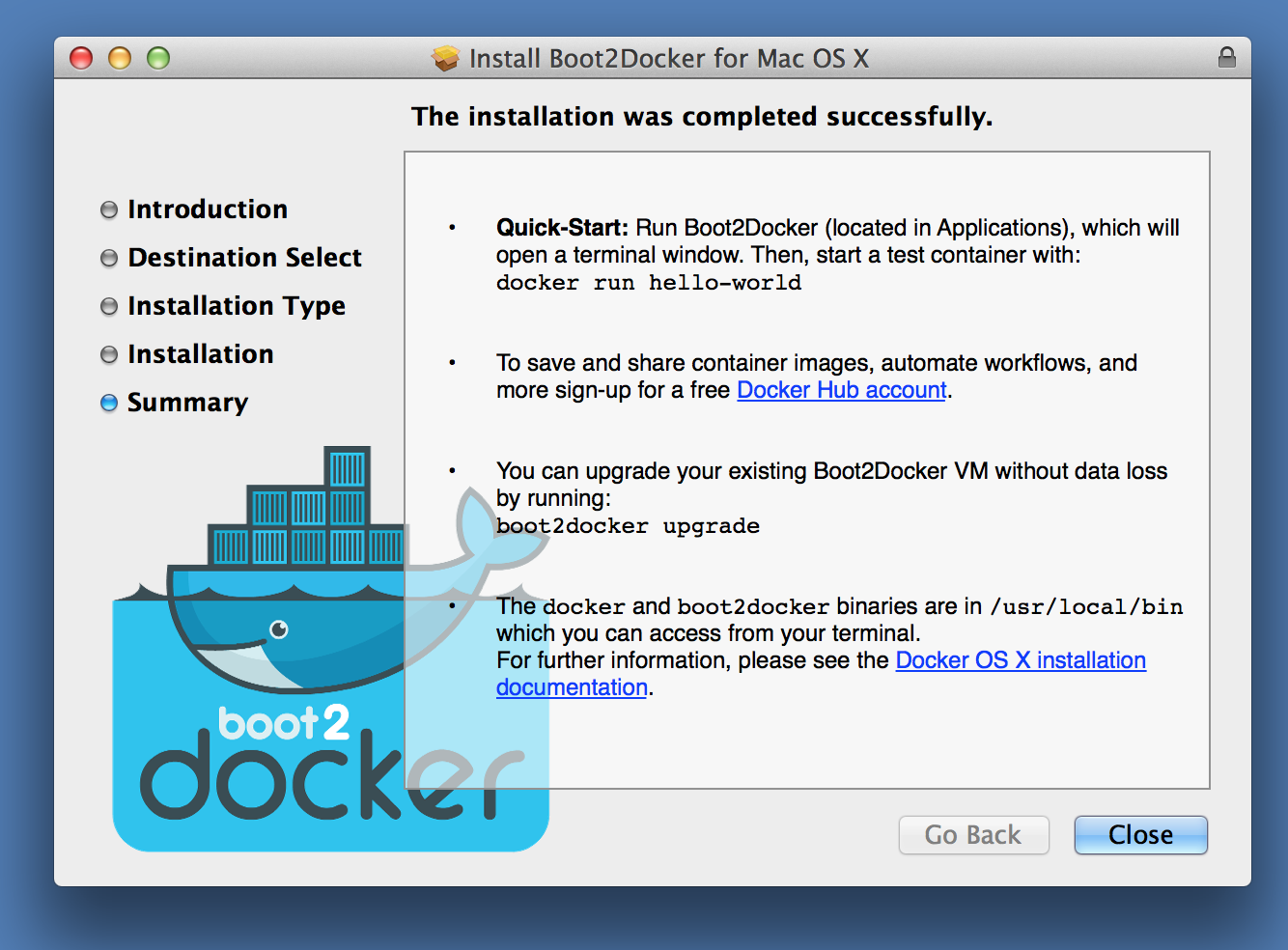

When the installation is successful, click the Close button to exit the installer.

Step 7

You now have a shiny icon for boot2docker in /Applications you can click on to start a boot2docker terminal window session.

Congrats. You now have boot2docker installed.

Here is a link to my Tampa Bay Barcamp 2014 presentation slides for Immutable Infrastructure Persistence